What are the accuracy and precision of a universal testing machine, and how are they measured?

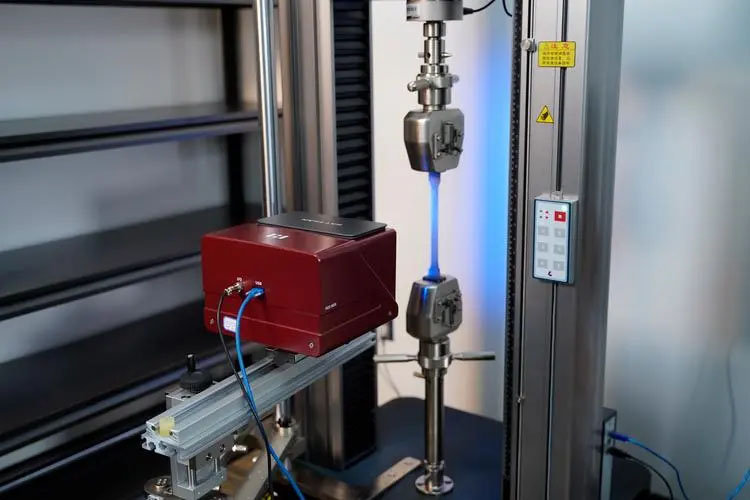

Universal Testing Machines (UTMs) are indispensable in the field of materials testing, providing vital data on the mechanical properties of materials such as metals, polymers, composites, and ceramics. These machines are used extensively in quality control, research, and development to assess properties like tensile strength, compressive strength, elongation, and flexural strength. The reliability of the data generated by UTMs depends significantly on their accuracy and precision. This article explores these concepts in detail, discussing their importance, how they are measured, and the standards that guide these measurements.

Understanding Accuracy and Precision

Accuracy refers to the degree of closeness between a measured value and the true or accepted reference value. High accuracy in a UTM ensures that the results of the material tests are close to the actual properties of the material being tested. For example, if a material’s actual tensile strength is 500 MPa, a UTM with high accuracy will measure a value close to 500 MPa.
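Accuracy is usually quantified as the error of a reading relative to the reference value. A minimal sketch using the 500 MPa example above, with a hypothetical measured value of 502.5 MPa and an illustrative helper name:

```python
def relative_error_percent(measured: float, reference: float) -> float:
    """Signed error of a measurement as a percentage of the reference value."""
    if reference == 0:
        raise ValueError("reference value must be non-zero")
    return (measured - reference) / reference * 100.0


# Hypothetical reading: accepted tensile strength 500 MPa, UTM reports 502.5 MPa.
error = relative_error_percent(measured=502.5, reference=500.0)
print(f"relative error: {error:+.2f} %")  # relative error: +0.50 %
```

A positive result means the machine reads high; a negative result means it reads low.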

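Precision refers to the consistency of measurement results when tests are repeated under the same conditions; repeatability, the agreement between repeated measurements made with the same machine, operator, and method, is its most commonly quoted form. High precision means that repeated measurements, such as readings at the same applied force during calibration or results from nominally identical specimens, show minimal variation. Because tensile and compression tests to failure are destructive, the same specimen cannot be retested, so the precision of material results is judged across nominally identical specimens. Precision is crucial for the reliability and reproducibility of test results. Precision alone does not guarantee accuracy: a machine whose readings cluster tightly around the wrong value is precise but inaccurate.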
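Precision is typically summarised by the scatter of repeated readings. The sketch below, with a hypothetical helper name and five hypothetical readings taken at the same nominal force, reports the mean, sample standard deviation, coefficient of variation, and range using Python's standard `statistics` module:

```python
import statistics


def repeatability_summary(readings: list[float]) -> dict[str, float]:
    """Summarise the scatter of readings repeated under the same conditions."""
    if len(readings) < 2:
        raise ValueError("need at least two readings to estimate scatter")
    mean = statistics.mean(readings)
    stdev = statistics.stdev(readings)  # sample standard deviation (n - 1 divisor)
    return {
        "mean": mean,
        "stdev": stdev,
        "cv_percent": stdev / mean * 100.0,  # coefficient of variation
        "range": max(readings) - min(readings),
    }


# Hypothetical: five readings (kN) at the same nominal applied force.
summary = repeatability_summary([10.02, 9.98, 10.01, 9.99, 10.00])
for name, value in summary.items():
    print(f"{name}: {value:.4f}")
```

The smaller the standard deviation and range, the more precise the machine; comparing the mean against a reference value is a separate, accuracy question.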

Importance of Accuracy and Precision

Accuracy and precision are fundamental to ensuring that the data from UTMs are reliable and can be trusted for critical decision-making in product design, material selection, and quality assurance. Inaccurate or imprecise data can lead to significant errors in engineering applications, potentially causing product failures, safety hazards, and financial losses.

Measurement and Calibration of Accuracy and Precision

To ensure the accuracy and precision of UTMs, regular calibration and verification are necessary. This process involves several steps and adheres to established international standards.

Force Calibration

1.Load Cells: Load cells are the heart of UTMs, responsible for measuring the applied force. They convert mechanical force into an electrical signal, which is then interpreted by the machine’s software.

2.Calibration Standards: Force calibration of UTMs is guided by standards such as ASTM E4 (Standard Practices for Force Verification of Testing Machines) and ISO 7500-1. These standards outline the procedures for verifying the accuracy of force measurements.
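Many UTM load cells are strain-gauge bridges whose output is specified in millivolts per volt of excitation (mV/V) at rated capacity. As a minimal sketch, assuming a linear cell with a known zero offset and hypothetical ratings (10 kN capacity, 2.0 mV/V rated output, 10 V excitation), the conversion from bridge signal to force looks like this; the function name is illustrative, not any vendor's API:

```python
def load_cell_force(signal_mv: float, excitation_v: float,
                    rated_output_mv_per_v: float, capacity_n: float,
                    zero_offset_mv: float = 0.0) -> float:
    """Convert a strain-gauge bridge signal (mV) to force (N), assuming a linear cell."""
    if excitation_v <= 0 or rated_output_mv_per_v <= 0:
        raise ValueError("excitation and rated output must be positive")
    # Ratiometric output: bridge signal per volt of excitation, after removing zero offset.
    ratio_mv_per_v = (signal_mv - zero_offset_mv) / excitation_v
    # The rated output (mV/V) corresponds to the rated capacity, so scale linearly.
    return ratio_mv_per_v / rated_output_mv_per_v * capacity_n


# Hypothetical 10 kN cell, 2.0 mV/V rated output, 10 V excitation, 5.0 mV signal.
force = load_cell_force(signal_mv=5.0, excitation_v=10.0,
                        rated_output_mv_per_v=2.0, capacity_n=10_000.0)
print(f"{force:.1f} N")  # 2500.0 N
```

Real instruments add non-linearity and temperature corrections; force calibration is what confirms that this conversion holds across the range.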

Calibration Process:

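1.Application of Known Forces: Known forces are applied to the UTM using standard weights or calibrated force-proving instruments (such as reference load cells or proving rings) at a series of force levels spanning the range being verified.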

2.Comparison and Adjustment: The readings from the UTM are compared to the known values. Any discrepancies are noted, and adjustments are made to the machine’s settings to align the readings with the true values.

3.Documentation: The calibration results are documented, including the deviations and the corrections applied.

4.Accuracy Specification: High-quality UTMs are typically specified to a force measurement accuracy of ±0.5% of the reading down to 1/1000 of the load cell capacity, which is tighter than the ±1.0% of indicated force permitted by ASTM E4.
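The comparison step reduces to a percent error at each force level, (indicated - reference) / reference × 100, checked against a tolerance band. The sketch below uses hypothetical data for a 10 kN load cell and defaults to the ±0.5%-of-reading, down-to-1/1000-of-capacity specification quoted above; passing `tolerance_pct=1.0` checks against the ±1.0% limit of ASTM E4 instead. The class and function names are illustrative, and a real verification follows the full procedure of the applicable standard (number of runs, force levels, and reporting).

```python
from dataclasses import dataclass


@dataclass
class ForcePoint:
    reference_n: float  # force from the calibrated reference (weights or proving instrument)
    indicated_n: float  # force reported by the UTM


@dataclass
class PointResult:
    reference_n: float
    error_pct: float
    in_specified_range: bool
    passed: bool


def verify_force_points(points: list[ForcePoint], capacity_n: float,
                        tolerance_pct: float = 0.5,
                        range_divisor: float = 1000.0) -> list[PointResult]:
    """Percent error at each force level, checked against a +/- tolerance band."""
    # The tolerance is only claimed down to capacity / range_divisor.
    lower_limit_n = capacity_n / range_divisor
    results = []
    for p in points:
        # Error relative to the reference (correct) force.
        error_pct = (p.indicated_n - p.reference_n) / p.reference_n * 100.0
        in_range = lower_limit_n <= p.reference_n <= capacity_n
        passed = in_range and abs(error_pct) <= tolerance_pct
        results.append(PointResult(p.reference_n, error_pct, in_range, passed))
    return results


# Hypothetical calibration data for a 10 kN load cell.
data = [ForcePoint(5.0, 5.02), ForcePoint(10.0, 10.04), ForcePoint(1_000.0, 1_003.0),
        ForcePoint(5_000.0, 5_030.0), ForcePoint(10_000.0, 9_990.0)]
for r in verify_force_points(data, capacity_n=10_000.0):
    status = "PASS" if r.passed else ("FAIL" if r.in_specified_range else "OUT OF RANGE")
    print(f"{r.reference_n:>8.1f} N  error {r.error_pct:+.2f} %  {status}")
```

The 5 N point falls below 1/1000 of capacity, so the ±0.5% claim does not cover it at all, while the 5 kN point is in range but fails.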

Displacement Calibration

1.Extensometers: These devices measure the deformation or strain of the material during testing. They need to be calibrated to ensure accurate displacement measurements.

2.Calibration Standards: Displacement calibration follows standards such as ASTM E83 (Standard Practice for Verification and Classification of Extensometer Systems) and ISO 9513.
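An extensometer reports the extension of a fixed gauge length, from which engineering strain is computed as ε = ΔL / L0. A short sketch with hypothetical gauge lengths shows why displacement accuracy matters more on short gauge lengths: the same absolute error becomes a larger strain error.

```python
def engineering_strain(extension_mm: float, gauge_length_mm: float) -> float:
    """Engineering strain: change in length divided by the original gauge length."""
    if gauge_length_mm <= 0:
        raise ValueError("gauge length must be positive")
    return extension_mm / gauge_length_mm


# The same hypothetical 0.01 mm displacement error on two gauge lengths:
for gauge_mm in (25.0, 50.0):
    strain_error = engineering_strain(0.01, gauge_mm)
    print(f"L0 = {gauge_mm} mm -> strain error {strain_error:.4f}")
```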

Calibration Process:

1.Application of Known Displacements: Precise micrometers or gauge blocks are used to apply known displacements to the extensometer.

2.Comparison and Adjustment: The extensometer’s readings are compared to the known displacements, and any necessary adjustments are made.

3.Verification: The calibration is verified across the entire range of the extensometer to ensure consistency.

4.Accuracy Specification: A typical specification for displacement accuracy is ±0.5% of the reading or ±0.01 mm, whichever is greater.
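The "whichever is greater" rule means the allowed error switches from a fixed term to a proportional term as the reading grows. A minimal sketch using the ±0.5% / ±0.01 mm figures above and hypothetical calibrator data (function names are illustrative):

```python
def displacement_tolerance_mm(reading_mm: float, rel_pct: float = 0.5,
                              abs_mm: float = 0.01) -> float:
    """Allowed error: rel_pct of the reading or abs_mm, whichever is greater."""
    return max(abs(reading_mm) * rel_pct / 100.0, abs_mm)


def check_extensometer(points: list[tuple[float, float]]) -> list[tuple[float, float, float, bool]]:
    """For each (applied_mm, indicated_mm) pair, return (applied, error, tolerance, passed)."""
    results = []
    for applied_mm, indicated_mm in points:
        error_mm = indicated_mm - applied_mm
        tol_mm = displacement_tolerance_mm(indicated_mm)
        results.append((applied_mm, error_mm, tol_mm, abs(error_mm) <= tol_mm))
    return results


# Hypothetical (applied, indicated) pairs from a micrometer-type calibrator, in mm.
data = [(0.5, 0.508), (1.0, 0.985), (5.0, 5.02), (10.0, 10.07)]
for applied, err, tol, ok in check_extensometer(data):
    print(f"{applied:5.2f} mm  error {err:+.4f} mm  limit ±{tol:.4f} mm  {'PASS' if ok else 'FAIL'}")
```

With these defaults the fixed 0.01 mm term governs for readings below 2 mm, where 0.5% of the reading equals 0.01 mm.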

Verification and Maintenance

Regular verification and maintenance are essential to ensure that a UTM continues to perform accurately and precisely over time. This includes:

1.Routine Verification: Regular checks using standard weights and displacement devices to verify that the UTM remains within its specified accuracy and precision limits.

2.Environmental Control: Maintaining a controlled environment to minimize the effects of temperature, humidity, and vibration, which can impact measurement accuracy and precision.

3.Software Updates: Ensuring that the UTM’s software is up to date, as software errors can affect data integrity.

4.Component Inspection: Regular inspection and maintenance of mechanical components, such as grips, fixtures, and the drive system, to catch wear and tear before it affects performance.
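Routine checks with standard weights depend on converting mass to force correctly, which normally means correcting for local gravity and air buoyancy. A minimal sketch, assuming hypothetical values for local gravity (9.7935 m/s²), air density (1.2 kg/m³), and weight density (8000 kg/m³, typical of stainless steel); the function name is illustrative. Because g varies by roughly 0.5% between the equator and the poles, assuming the standard value of 9.80665 m/s² can use up a noticeable share of a ±0.5% tolerance.

```python
STANDARD_GRAVITY = 9.80665  # m/s^2, conventional standard acceleration of gravity


def dead_weight_force(mass_kg: float, local_g: float,
                      air_density: float = 1.2, weight_density: float = 8000.0) -> float:
    """Force (N) from a calibrated mass, corrected for local gravity and air buoyancy."""
    # Buoyancy: the displaced air pushes up, slightly reducing the effective force.
    return mass_kg * local_g * (1.0 - air_density / weight_density)


# Hypothetical 20 kg stainless-steel check weight at a site where g = 9.7935 m/s^2.
f_local = dead_weight_force(20.0, local_g=9.7935)
f_naive = dead_weight_force(20.0, local_g=STANDARD_GRAVITY)
print(f"corrected force: {f_local:.3f} N")
print(f"error from assuming standard gravity: {(f_naive - f_local) / f_local * 100:+.3f} %")
```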

The accuracy and precision of Universal Testing Machines are critical for obtaining reliable and consistent material test data. Accuracy ensures that the measurements reflect the true properties of the material, while precision ensures that repeated measurements under the same conditions yield consistent results. Regular calibration, adherence to international standards, and routine maintenance are essential practices to maintain the performance of UTMs. By ensuring high accuracy and precision, UTMs provide trustworthy data that is crucial for material selection, product development, and quality assurance in various industries.